Paper reading notes: “Keeping Your Eye on the Ball”

Keeping Your Eye on the Ball: Trajectory Attention in Video Transformers (NeurIPS 2021)

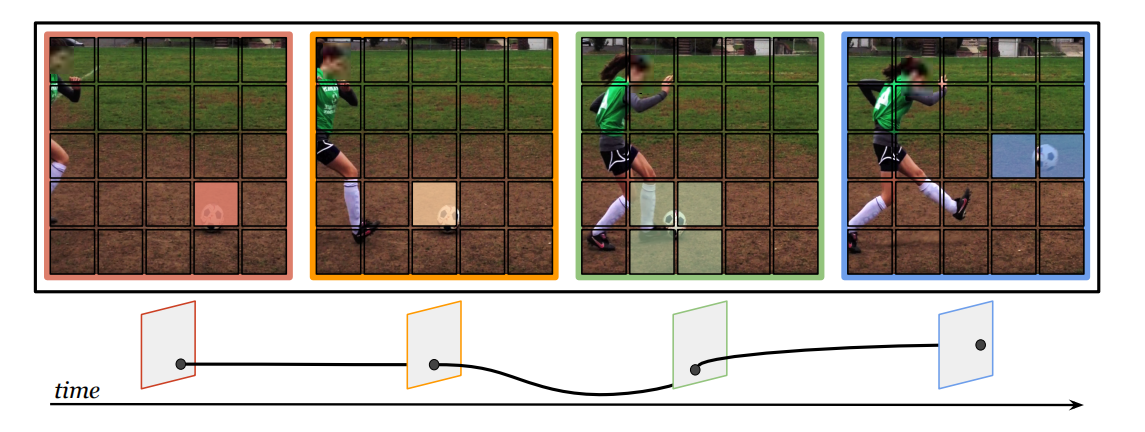

main idea: pooling along motion trajectories would provide a more natural inductive bias for video data, allowing the network to be invariant to camera motion.

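The video is first preprocessed into ST tokens (S spatial positions per frame, T time steps). A cuboid embedding aggregates disjoint spatio-temporal patches. Temporal and spatial positional encodings are then added to the features. Finally, a learnable classification token is added to the sequence.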
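Below is a minimal NumPy sketch of this tokenisation step. It rests on a few assumptions:

- the video array has shape (frames, H, W, C);
- each cuboid spans t frames × p × p pixels;
- tokens are ordered time-major.

The name `cuboid_tokenize` is hypothetical. All weights are random placeholders, whereas the real model learns the embedding, the position encodings and the classification token.

```python
import numpy as np

rng = np.random.default_rng(0)

def cuboid_tokenize(video, t, p, d, rng):
    """Split a video (frames, H, W, C) into non-overlapping t x p x p cuboids,
    embed each cuboid linearly into d dims, add spatial and temporal position
    embeddings and prepend a classification token.
    Returns (sequence of shape (1 + S*T, d), S, T).
    All weights are random placeholders (hypothetical), not learned values."""
    F, H, W, C = video.shape
    assert F % t == 0 and H % p == 0 and W % p == 0
    T, Hp, Wp = F // t, H // p, W // p
    S = Hp * Wp
    # Group the pixels of each cuboid together: (T, Hp, Wp, t, p, p, C)
    x = video.reshape(T, t, Hp, p, Wp, p, C).transpose(0, 2, 4, 1, 3, 5, 6)
    x = x.reshape(T, S, t * p * p * C)              # one flat vector per cuboid
    W_embed = rng.standard_normal((t * p * p * C, d)) * 0.02
    tokens = x @ W_embed                             # (T, S, d)
    pos_space = rng.standard_normal((1, S, d)) * 0.02  # shared by all frames
    pos_time = rng.standard_normal((T, 1, d)) * 0.02   # shared by all positions
    tokens = (tokens + pos_space + pos_time).reshape(T * S, d)
    cls = rng.standard_normal((1, d)) * 0.02         # classification token
    return np.concatenate([cls, tokens], axis=0), S, T

video = rng.standard_normal((8, 32, 32, 3))   # 8 frames of 32x32 RGB
seq, S, T = cuboid_tokenize(video, t=2, p=8, d=64, rng=rng)
print(seq.shape, S, T)  # (65, 64) 16 4
```

With 2-frame, 8×8 cuboids, the toy clip yields S = 16 positions per frame and T = 4 time steps. That gives 64 tokens plus the classification token.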

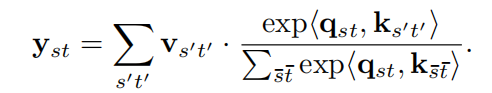

video self-attention

joint space-time attention

- The cost is quadratic in the number of tokens: $\mathcal{O}(S^2T^2)$
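To make the quadratic cost concrete, here is a single-head NumPy sketch of joint space-time attention. Query/key/value projections and multiple heads are omitted for brevity, which is a simplification. With N = S·T tokens, the attention matrix alone holds N² = S²T² entries.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def joint_attention(q, k, v):
    """q, k, v: (N, D) with N = S * T tokens taken from all frames.
    Every token attends to every token, so attn has shape (N, N)."""
    d = q.shape[-1]
    attn = softmax(q @ k.T / np.sqrt(d), axis=-1)
    return attn @ v, attn

rng = np.random.default_rng(0)
S, T, D = 16, 8, 32          # hypothetical sizes: 16 patches per frame, 8 frames
N = S * T
q, k, v = (rng.standard_normal((N, D)) for _ in range(3))
out, attn = joint_attention(q, k, v)
print(out.shape, attn.shape)  # (128, 32) (128, 128)
```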

divided space-time attention (TimeSformer)

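- Advantage: the cost drops to $\mathcal{O}(S^2T + ST^2)$, because spatial attention runs within each frame and temporal attention runs across frames at each spatial position

- Disadvantage: the model analyses time and space independently (it cannot reason jointly over spatio-temporal information)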

- It processes a large amount of redundant information, is inflexible, and cannot make full use of the informative tokens
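Below is a NumPy sketch of divided space-time attention. It assumes tokens arranged as (T, S, D), temporal attention applied before spatial attention, and no learned projections; these are simplifications for illustration. The helper `score_entries` only counts attention-matrix entries so the two schemes can be compared. The example sizes (196 patches per frame, 8 frames) are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attend(x):
    """Single-head self-attention along axis -2 of x (..., L, D); x serves as
    query, key and value. Leading axes are independent groups."""
    d = x.shape[-1]
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(d)   # (..., L, L)
    return softmax(scores, axis=-1) @ x

def divided_attention(x):
    """x: (T, S, D). Temporal attention over the T frames at each of the S
    positions, then spatial attention over the S positions inside each frame."""
    x = np.swapaxes(attend(np.swapaxes(x, 0, 1)), 0, 1)  # S groups of length T
    return attend(x)                                     # T groups of length S

def score_entries(S, T):
    joint = (S * T) ** 2             # one (ST x ST) matrix
    divided = S * T * T + T * S * S  # S matrices of T x T plus T matrices of S x S
    return joint, divided

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 32))   # T=8 frames, S=16 positions, D=32
y = divided_attention(x)
print(y.shape)                 # (8, 16, 32)
print(score_entries(196, 8))   # (2458624, 319872)
```

Each of the two attention steps mixes information along only one axis, time or space. That is the source of the independence limitation listed above.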

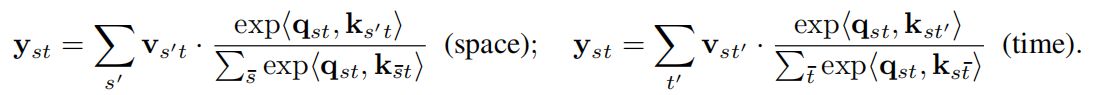

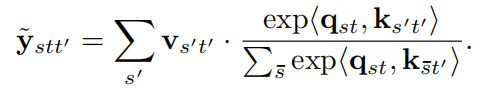

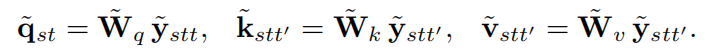

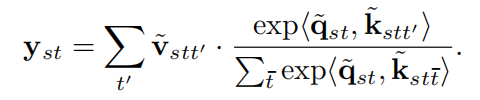

trajectory attention

- better able to characterize the temporal information contained in videos

- aggregates information along implicitly determined motion paths

- aims to share information along the motion path of the ball

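- Applied along the spatial dimension, independently for each frame

- Implicitly locates where the trajectory is at each time step (by comparing the query with the keys)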
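The per-frame spatial attention can be sketched in NumPy as follows. The sketch assumes per-frame queries, keys and values of shape (T, S, D) and a single head. For every query at (s, t), the softmax runs separately over the S positions of each frame u. Each query therefore gets one "trajectory token" per frame. This is a sketch of the idea under those assumptions, not the authors' implementation; `trajectory_tokens` is a hypothetical name.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def trajectory_tokens(q, k, v):
    """q, k, v: (T, S, D).
    Returns y: (T, S, T, D), where y[t, s, u] pools the values of frame u,
    weighted by how well each position in frame u matches the query at (s, t),
    i.e. a soft estimate of where that point lies in frame u."""
    D = q.shape[-1]
    # scores[t, s, u, x] = <q[t, s], k[u, x]> / sqrt(D): every query vs every key
    scores = np.einsum("tsd,uxd->tsux", q, k) / np.sqrt(D)
    attn = softmax(scores, axis=-1)           # normalised over space x, per frame u
    y = np.einsum("tsux,uxd->tsud", attn, v)
    return y, attn

rng = np.random.default_rng(0)
T, S, D = 4, 9, 16
q, k, v = (rng.standard_normal((T, S, D)) for _ in range(3))
y, attn = trajectory_tokens(q, k, v)
print(y.shape, attn.shape)   # (4, 9, 4, 16) (4, 9, 4, 9)
```

The `scores` tensor has T·S·T·S = (ST)² entries. This step alone is therefore as expensive as joint space-time attention.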

trajectory aggregation

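- Applied along the temporal dimension

- Complexity: this step alone costs $\mathcal{O}(ST^2D)$. However, the trajectory attention step before it compares every query with every key, so the whole operation remains $\mathcal{O}(S^2T^2D)$, as expensive as joint space-time attention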
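Here is a matching NumPy sketch of the temporal aggregation. It assumes the query is projected from the trajectory token at the reference frame (u = t), while keys and values come from all T tokens along the trajectory. The softmax is taken over time only. Random arrays stand in for the trajectory tokens and for the hypothetical projection matrices `Wq`, `Wk` and `Wv`.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def trajectory_aggregation(y, Wq, Wk, Wv):
    """y: (T, S, T, D); y[t, s, u] is the trajectory token of query (s, t) in frame u.
    Returns (T, S, D): one aggregated output per original token."""
    T, S, _, D = y.shape
    idx = np.arange(T)
    y_ref = y[idx, :, idx]                  # (T, S, D): y[t, s, t], token at its own frame
    q = y_ref @ Wq                          # (T, S, D)
    k = y @ Wk                              # (T, S, T, D)
    v = y @ Wv                              # (T, S, T, D)
    scores = np.einsum("tsd,tsud->tsu", q, k) / np.sqrt(D)  # (T, S, T): S*T*T entries
    attn = softmax(scores, axis=-1)         # softmax over the T frames of the trajectory
    return np.einsum("tsu,tsud->tsd", attn, v)

rng = np.random.default_rng(0)
T, S, D = 4, 9, 16
y = rng.standard_normal((T, S, T, D))       # stand-in for real trajectory tokens
Wq, Wk, Wv = (rng.standard_normal((D, D)) / np.sqrt(D) for _ in range(3))
out = trajectory_aggregation(y, Wq, Wk, Wv)
print(out.shape)  # (4, 9, 16)
```

The score tensor here has only S·T² entries. Its cost is small next to the per-frame step that produces `y`.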

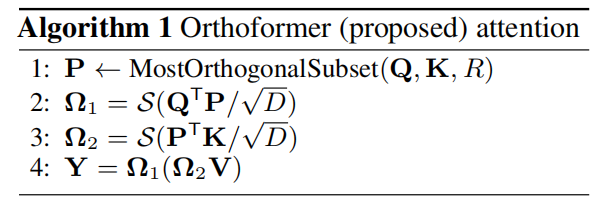

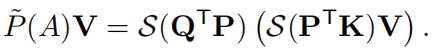

Approximating attention

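Prototype-based attention approximation:

- Goal: reconstruct the attention operation as accurately as possible while using as few prototypes as possible

- The authors select the R most orthogonal vectors from the queries and keys as the prototypes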
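One way to pick "the most orthogonal" vectors is a greedy search, sketched below in NumPy. The details are assumptions, not the paper's exact procedure:

- the first prototype is a random row;
- each later pick is the candidate whose largest absolute cosine similarity to the prototypes chosen so far is smallest;
- in attention, the candidate pool would be the queries and keys stacked together, e.g. `np.vstack([Q, K])`.

`most_orthogonal_subset` is a hypothetical name.

```python
import numpy as np

def most_orthogonal_subset(x, R, seed=0):
    """Greedily pick R rows of x (N, D) that are as mutually orthogonal as possible.
    Hypothetical sketch: start from a random row, then repeatedly add the row whose
    largest |cosine similarity| to the rows already chosen is smallest."""
    rng = np.random.default_rng(seed)
    xn = x / np.linalg.norm(x, axis=1, keepdims=True)   # unit-normalise each row
    chosen = [int(rng.integers(len(x)))]
    max_sim = np.abs(xn @ xn[chosen[0]])                # worst-case similarity per row
    for _ in range(R - 1):
        max_sim[chosen] = np.inf                        # never pick a row twice
        nxt = int(np.argmin(max_sim))
        chosen.append(nxt)
        max_sim = np.maximum(max_sim, np.abs(xn @ xn[nxt]))
    return x[chosen]

# Toy check: 15 vectors that point in only 3 directions (5 copies each).
x = np.repeat(np.eye(3), 5, axis=0)
P = most_orthogonal_subset(x, R=3)
print(np.round(P @ P.T, 3))   # identity matrix: one prototype per direction
```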

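Because the largest cost term is $\mathcal{O}(RDN)$, where $N$ is the number of tokens, the approximation scales linearly in $N$ instead of quadratically.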
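Below is a sketch of how R prototypes can stand in for the full N × N attention matrix. It assumes the factorisation A ≈ softmax(QPᵀ/√D) · softmax(PKᵀ/√D), a common prototype/landmark scheme; the paper's exact normalisation may differ. For brevity, the prototypes here are a random subset of the queries rather than a most-orthogonal selection. Computing `a_pk @ v` first keeps every intermediate at N × R or R × D. No N × N matrix is ever formed, and the cost stays O(NRD).

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def prototype_attention(q, k, v, p):
    """q, k: (N, D), v: (N, Dv), p: (R, D) prototypes with R << N.
    Approximates softmax(q k^T / sqrt(D)) v as softmax(q p^T) @ (softmax(p k^T) @ v)."""
    D = q.shape[-1]
    a_qp = softmax(q @ p.T / np.sqrt(D), axis=-1)   # (N, R): queries -> prototypes
    a_pk = softmax(p @ k.T / np.sqrt(D), axis=-1)   # (R, N): prototypes -> keys
    return a_qp @ (a_pk @ v)                        # (R, Dv) first, then (N, Dv)

rng = np.random.default_rng(0)
N, D, R = 1024, 32, 16
q, k, v = (rng.standard_normal((N, D)) for _ in range(3))
p = q[rng.choice(N, size=R, replace=False)]         # placeholder prototype choice
out = prototype_attention(q, k, v, p)
print(out.shape)  # (1024, 32)
```

Both factors are row-stochastic, so each row of the approximate attention still sums to one, as with the exact softmax.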

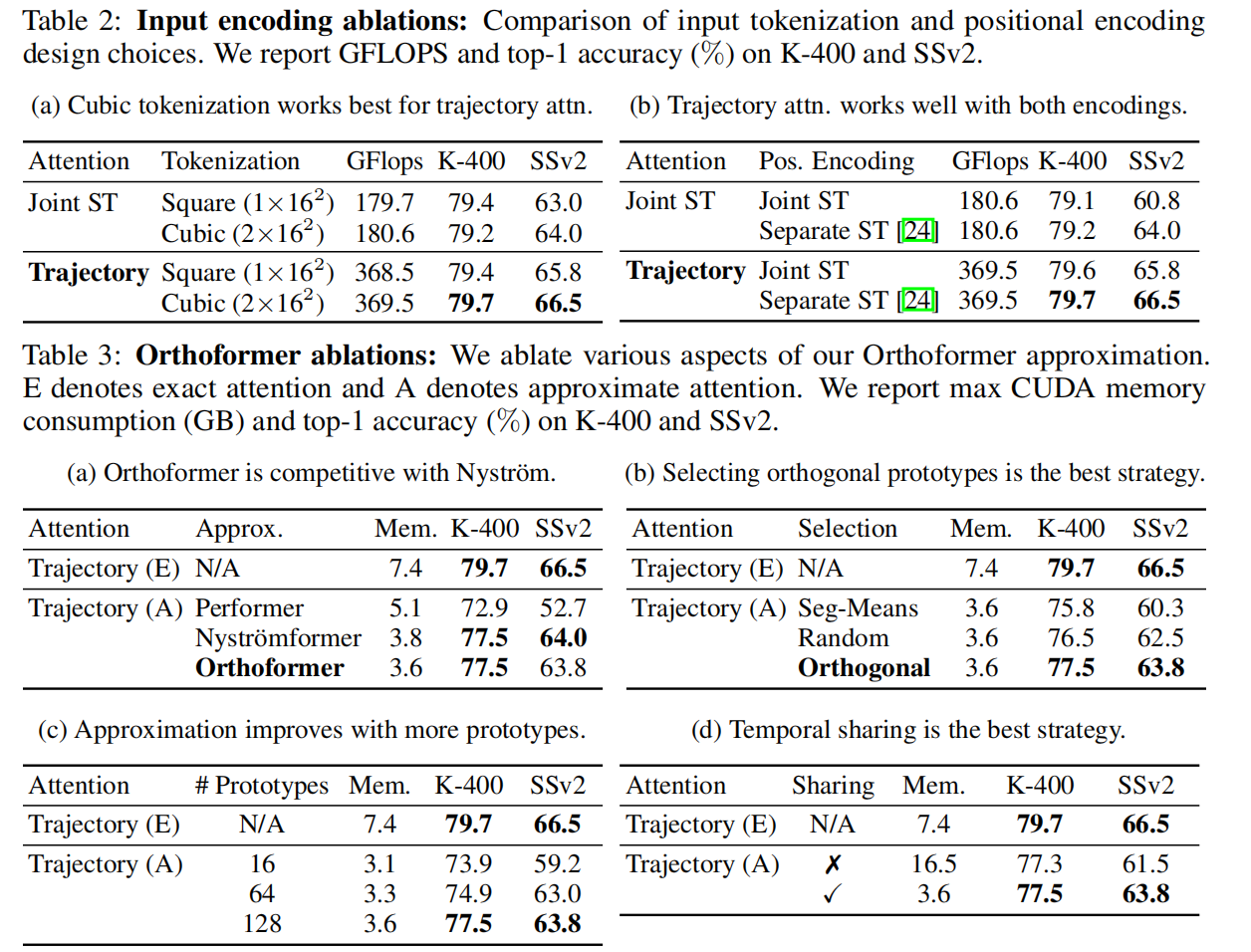

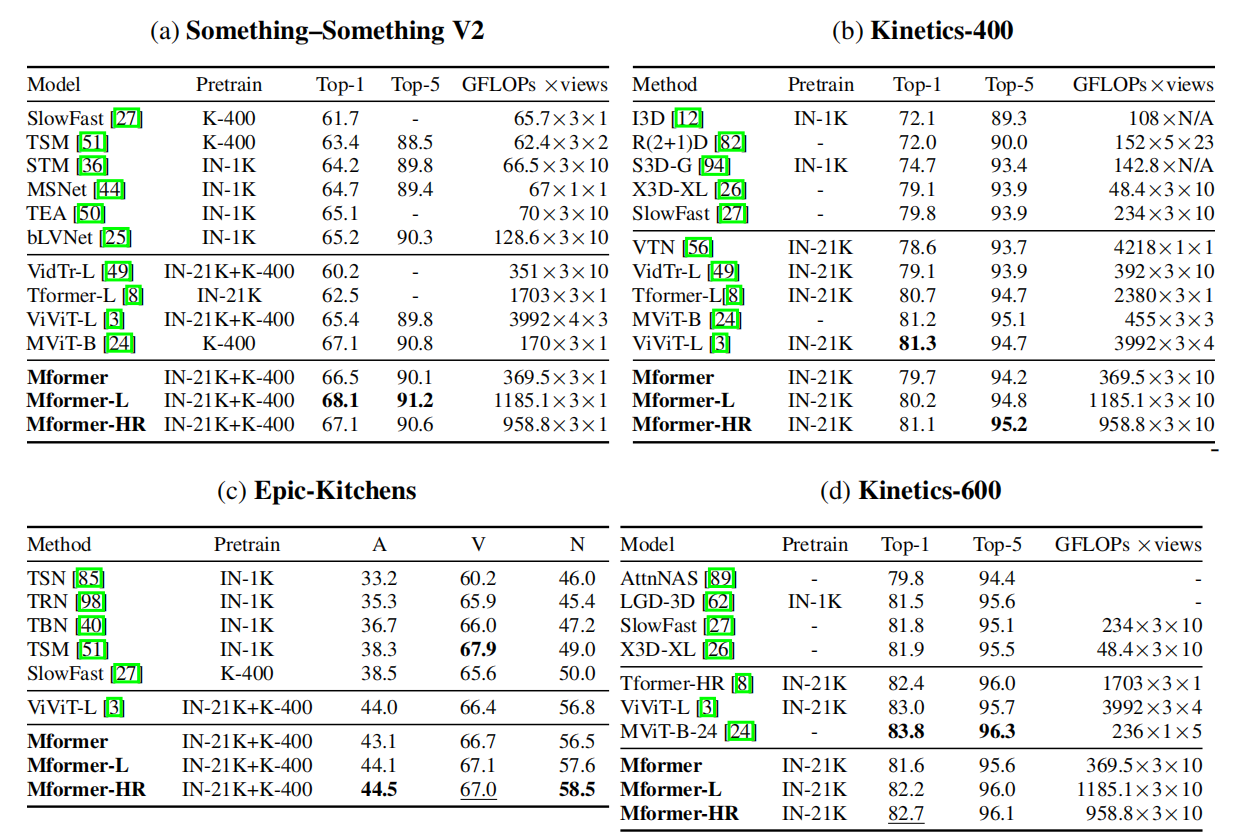

Experiments

- Post title: Paper reading notes: “Keeping Your Eye on the Ball”

- Post author:sixwalter

- Create time:2023-03-13 00:00:00

- Post link:https://coelien.github.io/2023/03/13/paper-reading/paper_reading_062/

- Copyright Notice:All articles in this blog are licensed under BY-NC-SA unless stating additionally.
